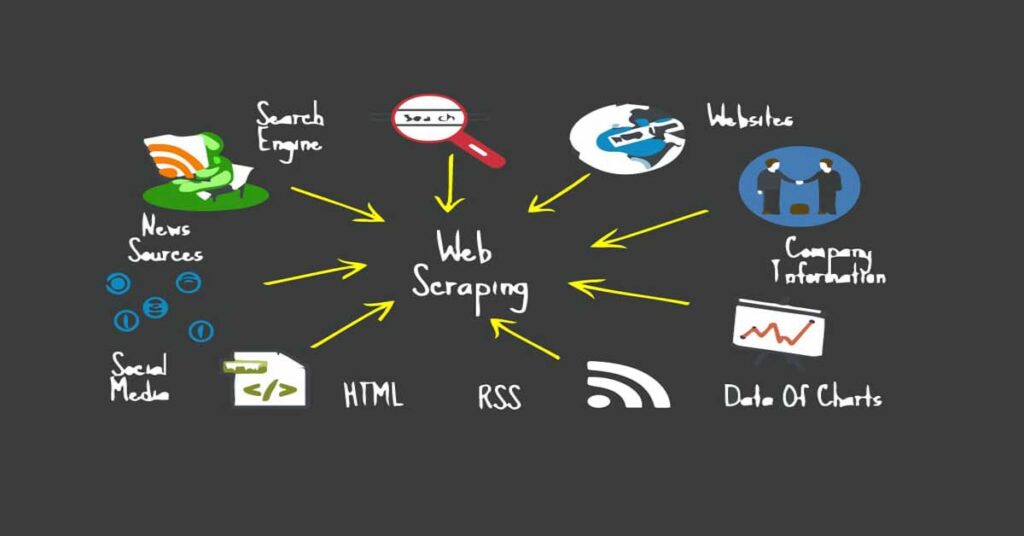

In today’s world, businesses compete against one another with extensive information collated from a multitude of users. This can turn into a complex process that demands a considerable amount of time and effort. In such an instance, web scraping brings several advantages.

Also called web harvesting, it’s an automated process that simplifies the process of data retrieval from websites. Companies use web scraping tools to serve various purposes, including lead generation, news monitoring, and price intelligence, to get a step ahead in the competition between adversaries in the long run.

In this post, we’ll take a closer look at some of the best tools available in the data scraping landscape and cover their features to help you pick the right one for your business. But before that, let’s learn the importance of having technical knowledge for data extraction.

Page Contents

Importance of Technical Knowledge/Experience for Web Scraping

Web scraping may seem straightforward, but it has a learning curve. In general, about 20% of all the websites developed are hard to scrape from. Those with zero to little technical know-how or experience face a myriad of difficulties while using web scrapers. Nowadays, for instance, many site owners set honeypot traps on a page or use anti-scraping technologies to catch scrapers.

No matter how much advanced your tool is, you will still need some practical knowledge to dodge such traps and roadblocks by writing algorithms or logical code. When you’re proficient in programming languages, including HTML, CSS, and Python, you will be able to scrape data from websites effectively.

7 Best User-friendly Web Scrapers

With numerous web scraping tools available, it can become difficult to make the right choice. Each web scraper is unique in terms of features and functionalities. So, to simplify your search, below is the list of the seven top user-friendly web scraping software that you can pick from:

OctoParse

OctoParse is an easy-to-use web data extraction software that offers cloud services to save retrieved data and IP rotation to prevent IPs from getting blacklisted. It’s a no-code web scraping tool, making it ideal for non-developers.

Features

- IP rotation

- Infinite scrolling

- Schedule scraping

- Built-in scraping templates, including Yelp and Amazon

- Data results are offered in Excel, CSV, or API formats

Mozenda

Having scraped over billions of web pages worldwide, Mozenda is a cloud-based web scraping solution that enables the extraction of unstructured web data, conversion into a structured format, and export to a cloud storage provider, such as Dropbox or Microsoft Azure. Also, it lets you control agents and data collections without manually accessing the console.

Features

- Point-and-click interface

- Request blocking

- Job sequencer for harvesting data in real-time

- Website scraping through different geographical locations

Import.io

Import.io is a web scraping tool that converts semi-structured information from web pages into structured data for various purposes, including driving business decisions, integrating with applications and other platforms using APIs and webhooks, etc. This tool comes with a user-friendly interface with a simple dashboard.

Features

- Automated web interaction and workflows

- Supports Geolocating and CAPTCHA solving

- Real-time data retrieval through JSON REST-based and streaming APIs

- Supports programming languages, including Java, JavaScript, Python, Ruby, PHP, Go, REST, NodeJS, and C#

WebHarvy

WebHarvy is a code-free web scraper that automatically crawls multiple pages and categories within sites and scrapes text, HTML, and images. In addition to handling navigation and pagination, WebHarvy provides support for proxies that enable you to scrape data anonymously without getting your IP blocked.

Features

- Easy-to-use, intuitive UI

- Schedule scraping without user intervention

- Intelligent pattern detection

- Supports file formats like CSV, XML, JSON, and Excel

Apify

Apify is one of the most robust no-code web scraping platforms used to build an API for sites, with integrated datacenter and residential proxies optimized for data retrieval. Its store has ready-made scrapers for websites like Facebook, Twitter, Instagram, and Google Maps.

Features

- Easy data extraction from Amazon

- Supports data retention, shared datacenter IPs, and ready-made browser tools

- Rich developer ecosystem

- Seamless integration with RESTful API, Zapier, and Webhooks

Diffbot

Diffbot is another web scraping tool that enables users to identify pages automatically with the Analyze API feature and gather images, videos, products, articles, or discussions. It’s ideal for programmers, and IT companies whose use cases focus more on data analysis, including sentiments and natural language.

Features

- Fully-hosted SaaS

- Custom crawling controls

- JSON or CSV data formatting

- Seamless integration with Google Sheets, Excel, and Zapier

- Comprehensive Knowledge Graph, Datacenter Proxies, and Custom SLA

ParseHub

ParseHub is a free web scraping tool with more features than many other scrapers; for instance, you can scrape images, comments, pop-ups, download JSON and CSV files, etc. In addition, the tool offers free and custom enterprise plans for massive data extraction.

Features

- Automatic data extraction and storage on servers

- Offers API & webhooks for integration

- IP rotation and regular expressions

- Infinite scroll

Tips for Using a Web Scraper

Ensure that the scraper of choice is user-friendly, automated, and able to scrape multiple pages at once while reducing overhead. It should also be customizable and capable of extracting data from complex websites without manual intervention or supervision.

Before selecting any scraper tool, it’s important to check its compatibility with your website as well as any potential security risks or conflicts with existing server setup. Moreover, make sure that it allows you to access all types of content including HTML/XML files, JavaScript objects/files, CSS style sheets, images and video files among others.

Once an appropriate solution has been identified, be sure to test it thoroughly by running small batches before launching a full-scale deployment on larger datasets. Documenting all code snippets used will help maintain performance across various iterations and make troubleshooting any issues easier when needed. Also pay attention to how often websites are crawled so as not to overload servers or breach terms of service agreements in place or inadvertently blocked due to frequent requests made by automated bots within specific timeframes.

Finally, be sure that you have adequate storage capacity for all incoming data collected during your project so as not run out of storage quickly or lose important data due to lack of space on your hard drive. It can help generate valuable insight into consumer behavior as well provide competitive edge over other businesses but only when done properly ensuring maximum effectiveness for minimal effort invested in setup and maintenance activities over time..

Wrapping Up

In a nutshell, web scraping has become imperative for businesses since it eases the immensely tedious and time-taking process of continuously obtaining data from different sources. Several easy-to-use scrapers are available, like WebHarvy, which can be used with some programming skills to facilitate effortless scraping.